Secrets About the Best Dictation Apps

SpeechRecognition 3.10.1

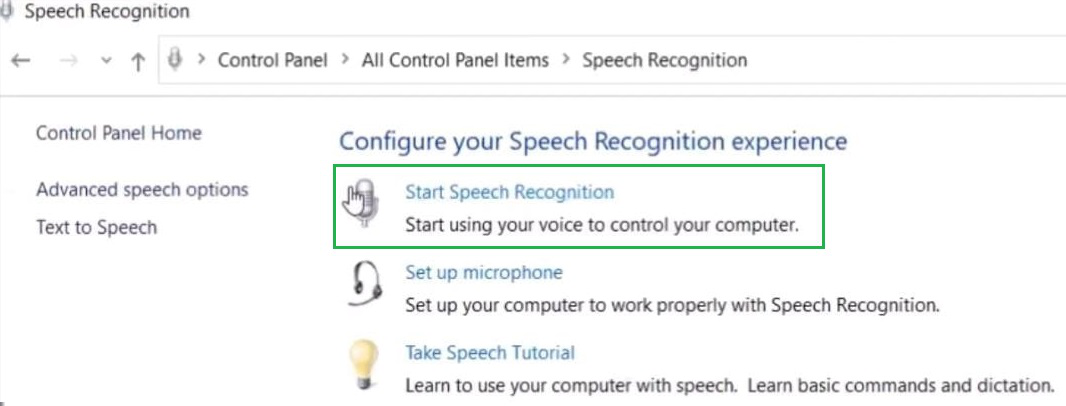

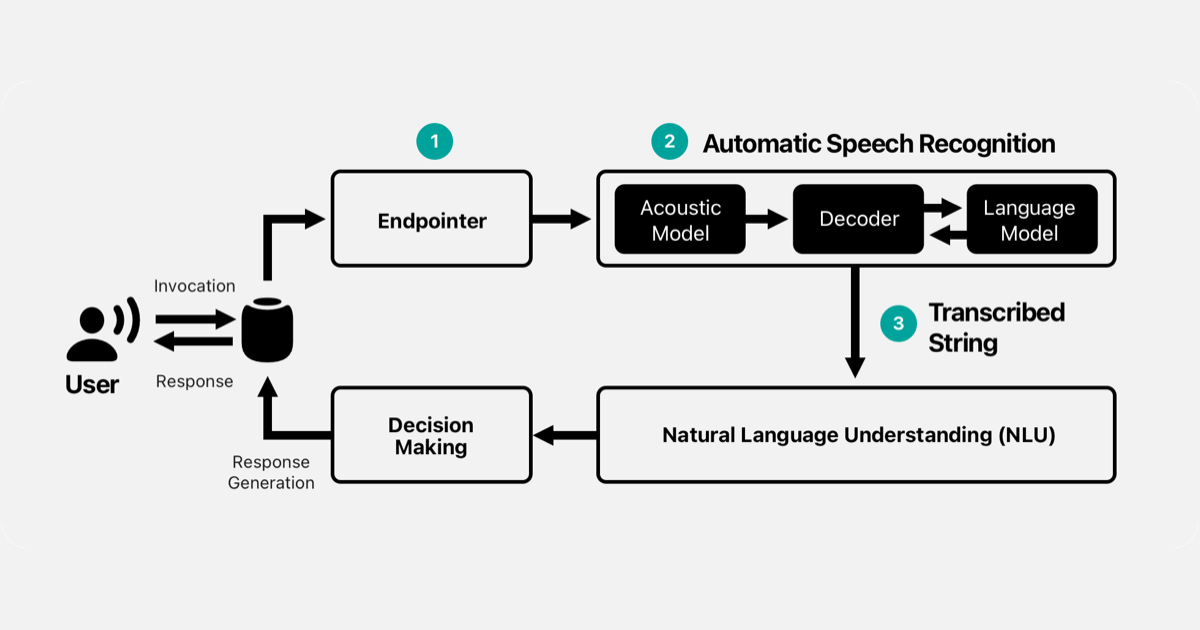

Technology has penetrated our routine lives and shapes our daily activities. Sung, in Advances in Neural Information Processing Systems. The following environment variables need to be defined. 4 million in funding in October 2021. They matter because right now you’re most likely reading this on a device that has both AI speech recognition technology and AI voice recognition technology. A connectionist temporal classification (CTC) decoder, a beam-search-based mechanism, is usually used. Coughing, hand claps, and tongue clicks would consistently raise the exception. Installing FLAC using Homebrew ensures that the search path is correctly updated. There are innumerable “example” scripts available in a collection of so-called Kaldi “recipes.” Similarly, a more complex n-gram or neural language model can optionally be used for rescoring. The input vectors at time indexes t-3 and t are spliced together into the linear layer, while the input vectors at time indexes t and t+3 are spliced together into the affine layer. It just appeared one day. You can record conversations on your phone or web browser, or import audio files from other services. This app lets users speak into their device and have their words transcribed into text. IEEE ICASSP, Seattle, pp. Learn about the speech recognition tools for Raspberry Pi: wake word detection, voice commands, speech-to-text, and voice activity detection. The API may return speech matched to the word “apple” as “Apple” or “apple,” and either response should count as a correct answer. Contact centers can use these insights to identify customer sentiment, detect trends, gather business intelligence, and improve their overall operations. For more information about how to access these features and utilities in Windows products, visit Microsoft’s website, Microsoft Accessibility Resources.
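The “Apple” versus “apple” point can be handled by normalizing case before comparing. The sketch below is a minimal illustration. It assumes the recognized text is already available as a plain string. `is_correct_answer` is a hypothetical helper, not part of any speech recognition API, and stripping surrounding punctuation is an extra assumption.

```python
def is_correct_answer(transcript: str, expected: str) -> bool:
    """Compare a recognized word with the expected answer, ignoring case.

    Hypothetical helper: it also strips surrounding whitespace and
    punctuation (an assumption about what a recognizer may return).
    """
    cleaned = transcript.strip().strip(".,!?")
    # casefold() is a stronger form of lower() intended for caseless matching
    return cleaned.casefold() == expected.strip().casefold()


print(is_correct_answer("Apple", "apple"))   # True
print(is_correct_answer("apple.", "apple"))  # True
print(is_correct_answer("maple", "apple"))   # False
```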
It is listed in the package.json file, so running the yarn command installs it along with the other required dependencies. These solutions offer far more flexibility in content, ranging from detailed notes to verbatim records, with fewer mistakes. Then we can add some speech logic to it. 79 billion dollars, or £20. However, support for every feature of each API it wraps is not guaranteed. That’s not the end of the story, though. We first compare the models in terms of model size, number of activations, and number of operations required to process one frame of speech. We then translate these metrics into hardware requirements for memory load and throughput. In addition, I am using SpeechRecognition 3. The deep learning research community is actively searching for ways to keep improving these models with the latest research, so there’s no concern about accuracy plateauing any time soon. In fact, we’ll see deep learning models reach human-level accuracy in the next few years.
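The sketch below shows roughly how such metrics translate into hardware requirements, by counting parameters, activations, and operations for a single LSTM layer. Several things are assumptions made for the example, not figures from the comparison described above:

* the layer sizes (320 inputs, 512 hidden units);
* float32 weights;
* a rate of 100 frames per second (a 10 ms frame shift);
* counting one multiply-accumulate as two operations.

```python
def lstm_cost(n_in: int, n_hidden: int, bytes_per_weight: int = 4,
              frames_per_second: int = 100) -> dict:
    """Rough per-layer cost model for a standard LSTM (4 gates, one bias per gate).

    Assumptions: float32 weights (4 bytes), 100 frames/s (10 ms shift), and
    one multiply-accumulate (MAC) counted as two operations.
    """
    # Each gate has an input weight matrix, a recurrent weight matrix and a bias vector.
    params = 4 * (n_in * n_hidden + n_hidden * n_hidden + n_hidden)
    # One MAC per input and recurrent weight for every frame (biases and
    # element-wise gate math are ignored here).
    macs_per_frame = 4 * (n_in * n_hidden + n_hidden * n_hidden)
    return {
        "params": params,
        "memory_mb": params * bytes_per_weight / 1e6,
        # Four gate outputs plus the cell state and the hidden state.
        "activations_per_frame": 6 * n_hidden,
        "gops": 2 * macs_per_frame * frames_per_second / 1e9,
    }


cost = lstm_cost(n_in=320, n_hidden=512)  # hypothetical layer sizes
print(cost["params"])  # 1705984
print(cost["gops"])
```

Under these assumptions, the layer needs about 6.8 MB of weights and roughly 0.34 GOPS to keep up with real-time audio. Stacking several such layers scales both figures accordingly.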

Connecting the WebSocket to the Front end

All you have to do is press and hold the microphone button. Have you ever wondered how to add speech recognition to your Python project? Innovation is key to enhancing business growth and success. Progressive Web Apps (PWAs) have gained significant popularity in recent years. It’s not cheap, but it offers the best accuracy and value that money can buy, missing only a few features that are found in Dragon Professional Individual. Self-supervision is the key to leveraging unannotated data and building better systems. That said, it does have a few drawbacks. Table 1: Picking and installing a speech recognition package. The user is allowed to turn off the time limit before encountering it. This will print out something like the following.

General Appreciation Words

Ultimately, you’re going to be using a few solutions per application, so don’t be afraid to mix and match. As the figure above shows, the query ran successfully; otherwise, an error message would have been thrown. See my thread in the Basic4Android forum. The speech recognition software we discussed is equipped with powerful AI engines and intelligent algorithms that become more effective with every use. For each little audio slice, it will try to figure out the letter that corresponds to the sound currently being spoken. Security flaws in such critical systems can directly cause harm to humans. In your current interpreter session, just type. The data does not need to be force-aligned. Although the temporal length of the sequence decreases toward the upper layers of the network, it remains slightly higher than in the other algorithms. Controls whether continuous results are returned for each recognition, or only a single result. This functionality is only available in Kindle books designated with “Screen Reader: Supported,” which you can check on the book’s details page. DeepSpeech does have its issues, though. It can recognize many different languages. Also known as automatic speech recognition (ASR), computer speech recognition, or speech-to-text (STT), this technology is the process of transforming spoken language into written text. For example, if you meant to write “suite” and the feature recognized it as “suit,” you can say “Correct suit,” select the suggestion using the correction panel or say “Spell it” to speak the correct text, and then say “OK.” To tell that you are in a list and that the list is part of a dialog, you will have to scroll your braille display back. Our system now reaches 5. I hope these tricks and workarounds help you make more use of this super nice API. I am looking forward to Mozilla implementing it too, as web apps you can talk to could already be the norm 👋.
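The network guesses a symbol for every slice, so its raw output contains repeated letters and blank symbols that a CTC decoder has to collapse. The sketch below shows the simplest variant, greedy (best-path) decoding, rather than the beam search decoder mentioned earlier. The `_` blank symbol and the per-frame probabilities are made up for illustration.

```python
from itertools import groupby

BLANK = "_"  # assumed blank symbol for this example


def ctc_greedy_decode(frame_probs: list[dict[str, float]]) -> str:
    """Greedy (best-path) CTC decoding.

    1. Pick the most likely symbol in every frame.
    2. Merge consecutive repeats of the same symbol.
    3. Remove blank symbols.
    """
    best_path = [max(probs, key=probs.get) for probs in frame_probs]
    collapsed = [symbol for symbol, _ in groupby(best_path)]
    return "".join(symbol for symbol in collapsed if symbol != BLANK)


# Made-up per-frame probabilities whose most likely symbols spell "hh_el_lo".
frames = [{symbol: 0.7, "z": 0.3} for symbol in "hh_el_lo"]
print(ctc_greedy_decode(frames))  # prints "hello"
```

The blank between the two `l` frames is what stops them from being merged into a single letter. This is also why the training data does not need to be force-aligned: the decoder, not the labels, decides where each letter begins and ends.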

Top 10 Quotes about Rewards and Recognition

microsoft/cognitive-services-speech-sdk-go: Go implementation of the Speech SDK. Nature Electronics DOI: 10. webkitSpeechRecognition; It introduces a dependency between the output symbols by using transition probabilities between them. This is the direct result of your amazing work. Instead of having to build scripts for accessing microphones and processing audio files from scratch, SpeechRecognition will have you up and running in just a few minutes. This category contains the following options. Chan, in Interspeech.
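The Viterbi algorithm is one classic way to decode the best symbol sequence when transition probabilities link consecutive symbols. The toy sketch below uses two hypothetical states, “silence” and “speech,” with invented probabilities. It illustrates the generic HMM-style technique, not the decoder of any particular system mentioned here.

```python
import math


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely state sequence and its log-probability."""
    # best[t][s] = (log-prob of the best path ending in state s at time t, previous state)
    best = [{s: (math.log(start_p[s]) + math.log(emit_p[s][observations[0]]), None)
             for s in states}]
    for obs in observations[1:]:
        prev = best[-1]
        column = {}
        for s in states:
            # Choose the predecessor that maximizes path score plus transition score.
            score, from_state = max(
                (prev[p][0] + math.log(trans_p[p][s]), p) for p in states
            )
            column[s] = (score + math.log(emit_p[s][obs]), from_state)
        best.append(column)
    # Trace back from the best final state through the stored predecessors.
    last = max(states, key=lambda s: best[-1][s][0])
    path = [last]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    return list(reversed(path)), best[-1][last][0]


# Invented toy model: hidden states emit "low" or "high" energy observations.
states = ("silence", "speech")
start_p = {"silence": 0.6, "speech": 0.4}
trans_p = {"silence": {"silence": 0.7, "speech": 0.3},
           "speech": {"silence": 0.2, "speech": 0.8}}
emit_p = {"silence": {"low": 0.9, "high": 0.1},
          "speech": {"low": 0.2, "high": 0.8}}
path, log_prob = viterbi(["low", "high", "high", "low"], states, start_p, trans_p, emit_p)
print(path)  # ['silence', 'speech', 'speech', 'silence']
```

Working in log space turns products of small probabilities into sums, which avoids numerical underflow on long inputs.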

Coifman R R, Meyer Y, Wickerhauser V 1992 Wavelet analysis and signal processing. If you’re running Windows 10, you’ll see speech-to-text referred to as dictation. Each Recognizer instance has seven methods for recognizing speech from an audio source using various APIs. Companies are using ASR technology for speech-to-text applications across a diverse range of industries. The left arrow key can also be used for storing as correct. 3: From the results, open the Speech Services by Google app and then tap Update. Time stacking, performed immediately after preprocessing, as well as between Enc LSTM1 and Enc LSTM2 Fig. These voice-activated AI systems have become an integral part of our daily lives, assisting with tasks, providing information, and controlling smart home devices. However, it was not until the 1950s that this line of inquiry led to genuine speech recognition. You can then use speech recognition in Python to convert the spoken words into text, make a query, or give a reply. Voice recognition technology is a software program or hardware device that can decode the human voice. Employee appreciation tips for extraordinary service: length-of-service awards. Enable speech transcription in multiple languages for a variety of use cases, including but not limited to customer self-service, agent assistance, and speech analytics. A prediction network uses previous symbols to predict the next symbol. One-hot encoding associates a binary vector with each word in the vocabulary. And while a private thank-you or a written note feels good, nothing beats being acknowledged and recognized publicly. However, IBM didn’t stop there; it continued to innovate over the years, launching the VoiceType Simply Speaking application in 1996. Contributions welcome. Language that is spoken, written, or signed through visual or tactile means to communicate with humans.
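Below is a minimal sketch of one-hot encoding over a small, hypothetical vocabulary. It assumes the vocabulary contains no duplicate words.

```python
def one_hot_encode(vocabulary: list[str]) -> dict[str, list[int]]:
    """Map each word to a binary vector with a single 1 at the word's index.

    Assumes the vocabulary has no duplicate words.
    """
    size = len(vocabulary)
    return {
        word: [1 if i == index else 0 for i in range(size)]
        for index, word in enumerate(vocabulary)
    }


vectors = one_hot_encode(["yes", "no", "stop"])  # hypothetical vocabulary
print(vectors["no"])  # [0, 1, 0]
```

Each vector is as long as the vocabulary, so one-hot codes grow quickly for large vocabularies. That is why models usually feed them into an embedding layer rather than using them directly.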

Prefixed properties

Switch this option on. Alternatively, clinicians can measure a patient’s most comfortable loudness level (MCL) and present stimuli at that level. Hence, assuming f is linear and time-independent. Then say the number and say “OK” to execute the command. Each tool, unique in its composition, caters to diverse rhythms and needs. Assistive technologies that are important in the context of this document include the following. Your contributions have made us way better today than we were before.

“But people have been saying that for decades now: the brave new world is always just around the corner.” Built-in dictation on a Mac computer. Storing can therefore be done at any time during scoring. When you made your original submission, you probably had a shortlist of journals you were considering. Your audio is sent to a web service for recognition processing, so it won’t work offline. The popularity of smartphones opened up the opportunity to put voice recognition technology into consumers’ pockets, while home devices such as Google Home and Amazon Echo brought it into living rooms and kitchens. Note: The version number you get might vary. Tools designed for transcribing will make use of this kind of voice recognition. It’s about showing that you understand and empathize with the struggles your team faces every day. While the validation accuracy has dropped, there is scope for improvement given the ample size of the dataset and the greater model complexity. You have sent your first request to Speech to Text. A speech authentication factor helps identify your distinctive voice. Thankfully, with the help of conversational AI software, it is possible to automatically generate transcriptions of whatever is spoken over a communication channel. Also referred to as speaker labels. In 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. If Whisper is substantially better in the real world, that’s the important thing, but I have no idea if that’s the case. For this implementation, I will be using Expo, so first check whether it is installed on your machine by using the following command. We also added similarly distributed random noise with a standard deviation of 0. At the root of your project, add a server folder with a server.
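The standard deviation of the added noise is cut off in the text above, so the sketch below takes it as a parameter. It makes the following assumptions, none of which are details from the source:

* float audio samples normalized to [-1.0, 1.0];
* zero-mean Gaussian noise;
* clipping the result back into that range;
* an example standard deviation of 0.01.

```python
import random


def add_gaussian_noise(samples: list[float], std: float, seed: int = 0) -> list[float]:
    """Add zero-mean Gaussian noise with standard deviation `std` to each sample.

    Assumes float samples normalized to [-1.0, 1.0]; results are clipped back
    into that range (an assumption, not a detail from the source).
    """
    rng = random.Random(seed)  # a local, seeded generator keeps the output reproducible
    return [max(-1.0, min(1.0, x + rng.gauss(0.0, std))) for x in samples]


clean = [0.0, 0.5, -0.99]
noisy = add_gaussian_noise(clean, std=0.01)  # 0.01 is an arbitrary example value
print(noisy)
```

Seeding a private `random.Random` instance rather than the global generator makes the augmentation repeatable. It also avoids disturbing any other code that relies on the module-level random state.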
Since input from a microphone is far less predictable than input from an audio file, it is a good idea to do this anytime you listen for microphone input. USP: Nuance Dragon is straightforward to use and implement. Several tools are available for developing deep learning speech recognition models and pipelines, including Kaldi, Mozilla DeepSpeech, NVIDIA NeMo, NVIDIA Riva, NVIDIA TAO Toolkit, and services from Google, Amazon, and Microsoft. It supports parallel processing on multiple GPUs or CPUs, along with strong support for NVIDIA technologies such as CUDA and NVIDIA’s powerful graphics cards. Once you execute the with block, try speaking “hello” into your microphone. Although defined in the Java specification JSR 135 (MMAPI), so far only a few manufacturers follow the standards defined by the Java Community Process. A detailed discussion of this is beyond the scope of this tutorial; check out Allen Downey’s Think DSP book if you are interested.

Open Browse Mode settings

React speech recognition currently supports polyfills for the following cloud providers. Besides its use in speech recognition, it was initially applied in machine translation and language modeling. Giga operations per second (GOPS). Developers across many industries now use automatic speech recognition (ASR) to increase business productivity, application efficiency, and even digital accessibility. Lazli L, Sellami M 2003 Connectionist probability estimators in HMM Arabic speech recognition using fuzzy logic. Invisible objects and objects used only for layout purposes. If you ever read your voicemails, you’re using speech recognition. This effectively censors voices that are not part of the “standard” languages or accents used to create these technologies. Lastly comes natural language generation (NLG), which converts structured data into text that mimics human conversation. If you have a desktop computer, you’re not ready to dictate until you get a headset with a microphone. Show that you take note of outstanding work ethic and quality standards by recognizing exceptional work over months or years instead of specific wins. For more information about the major pain points that developers face when adding speech-to-text capabilities to applications, see Solving Automatic Speech Recognition Deployment Challenges. It also supports Citrix, other virtualized environments, and a centralized admin center. These files are BSD licensed and redistributable as long as See speech recognition/pocketsphinx data//LICENSE. “Frederick Jelinek, Who Gave Machines the Key to Human Speech, Dies at 77,” by Steve Lohr.
However, obtaining human transcriptions for this same training data would be almost impossible given the time constraints of human processing speed. We use voice commands to access them through our smartphones, via Google Assistant or Apple’s Siri, for tasks such as voice search, or through our speakers, via Amazon’s Alexa or Microsoft’s Cortana, to play music. `signingkey DB45F6C431DE7C2DCD99FF7904882258A4063489 && git tag -s VERSION_GOES_HERE -m "Version VERSION_GOES_HERE"`. Attention allows neural networks to do the same, by assigning different weights or scores to different parts of the input or output.
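Attention weights can be computed in several ways. The sketch below uses scaled dot-product attention, the variant popularized by the Transformer, which may differ from the mechanism used in any specific speech model. It scores each key against a single query, turns the scores into weights with a softmax, and returns the weighted sum of the values. The vectors in the usage example are arbitrary.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    top = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Returns (weights, context): one weight per key, and the weighted
    sum of the value vectors.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    context = [
        sum(w * value[j] for w, value in zip(weights, values))
        for j in range(len(values[0]))
    ]
    return weights, context


# Arbitrary example: the query is most similar to the first key.
weights, context = dot_product_attention(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(weights)  # larger weight on the first position
print(context)
```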

Dependency Parsing

It is not, however, the purpose of this article to name them all, as anyone can think of numerous possible uses with a little imagination. When it’s time to make the actual employee appreciation speech, the presenter should. Also, I feel like shipping audio to the cloud and back has been shown to be just as fast as on-device transcription in a lot of scenarios. The University of Auckland. These positions are highlighted with a coloured rectangle outline. The SpeechRecognition documentation recommends a threshold value of 300, and it works well with a variety of audio files. FLAC stands for Free Lossless Audio Codec. The steps are presented in the following diagram. Specifically, the disparities found in the study were mainly due to the way words were said, since even when speakers said identical phrases, Black speakers were again twice as likely to be misunderstood as white speakers.
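The sketch below illustrates what an energy threshold does. It splits 16-bit PCM samples into frames, computes each frame's root-mean-square (RMS) energy, and marks frames above 300 as speech. It is a simplified illustration of the idea rather than SpeechRecognition's internal code. The 160-sample frame (10 ms at an assumed 16 kHz sample rate) and the helper names are hypothetical.

```python
import math


def frame_rms(frame: list[int]) -> float:
    """Root-mean-square amplitude of one frame of integer PCM samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def speech_frames(samples: list[int], threshold: float = 300,
                  frame_size: int = 160) -> list[bool]:
    """Mark each complete frame as speech (True) if its RMS energy exceeds `threshold`.

    Assumed: 160-sample frames (10 ms at 16 kHz); a trailing partial frame is ignored.
    """
    return [
        frame_rms(samples[start:start + frame_size]) > threshold
        for start in range(0, len(samples) - frame_size + 1, frame_size)
    ]


quiet = [50, -50] * 80      # one synthetic frame with RMS 50
loud = [1000, -1000] * 80   # one synthetic frame with RMS 1000
print(speech_frames(quiet + loud))  # [False, True]
```

A fixed threshold works well on clean recordings. With a live microphone, the right value depends on the room, which is why calibrating against ambient noise first is recommended.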

Resources

The number of substitutions, insertions, and deletions is computed using the Wagner-Fischer dynamic programming algorithm for word alignment. Creating critical work documentation has never been easier: voice recognition is 3x faster than typing, with up to 99% accuracy and no voice profile training required. Fatih is a computer scientist, entrepreneur, and writer. What problems does scarce data cause? The microphone also turns off automatically if you start typing, or after a delay of a few seconds. A practical and usable HCI system has the following characteristics. Users can use the software alongside other Watson services such as Watson Assistant and Discovery. The first version of SAPI was released in 1995 and was supported on Windows 95 and Windows NT 3.
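Below is a minimal sketch of word error rate (WER), computed with the Wagner-Fischer edit-distance table over words. It assumes whitespace tokenization, no text normalization, and a non-empty reference. `word_error_rate` is a hypothetical helper name.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words.

    Uses the Wagner-Fischer dynamic-programming table over whitespace-separated words.
    """
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # dist[i][j]: minimum edits turning the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words.
print(round(word_error_rate("the cat sat on the mat", "the cat sit on mat"), 3))  # 0.333
```

Because insertions are counted, WER can exceed 1.0 when the hypothesis is much longer than the reference.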

As early as 2002, Chang-hyun Park et al. An issue that looks something like this might arise. I needed something that, with a simple click, would show me topics, main words, main sentences, and so on. NVIDIA Riva is a platform that enables developers to create AI applications with minimal coding. The trick is to get Windows to understand you clearly enough that the process is worth the effort. First, the user clicks the Start Streaming button to start the streaming session. It has been rewarding to watch you reach goals as a solid unit. Having to change such an integral part of an identity to be able to be recognized is inherently cruel, Lawrence adds: “The same way one wouldn’t expect that I would take the color of my skin off.” Every tool has its own pricing policy: some charge for the product, some charge a monthly fee, and some charge based on the number of speech requests. All of NVDA’s system caret key commands will work in this mode; e.
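During a streaming session, audio usually goes to the recognizer as a sequence of small chunks rather than as one file. The sketch below shows only the chunking step, assuming 16 kHz, 16-bit mono PCM and 100 ms chunks. The transport (for example, a WebSocket connection) is left out, and `iter_chunks` is a hypothetical helper.

```python
from typing import Iterator


def iter_chunks(pcm: bytes, sample_rate: int = 16000, sample_width: int = 2,
                chunk_ms: int = 100) -> Iterator[bytes]:
    """Yield consecutive chunks of raw PCM audio, each `chunk_ms` long.

    Assumed format: mono PCM with `sample_width` bytes per sample. The last
    chunk may be shorter. Sending each chunk (e.g. over a WebSocket) is not shown.
    """
    chunk_bytes = sample_rate * sample_width * chunk_ms // 1000
    for start in range(0, len(pcm), chunk_bytes):
        yield pcm[start:start + chunk_bytes]


one_second = bytes(16000 * 2)  # one second of 16-bit silence at 16 kHz
chunks = list(iter_chunks(one_second))
print(len(chunks), len(chunks[0]))  # 10 3200
```

Smaller chunks lower the latency of partial results but increase per-message overhead. The 100 ms size here is only a common middle ground, not a requirement of any particular service.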

Employee Appreciation

Microsoft just made another big advancement in artificial intelligence. However, when we measure Whisper’s zero-shot performance across many diverse datasets, we find it is much more robust and makes 50% fewer errors than those models. The first thing you’ll need to realize about building an ASR system is that it’s not a simple task you can offshore or hand off to inexperienced workers. We feed the audio data to the neural network model, which listens to the audio and makes predictions. Multi-speaker isolation. For this reason, we’ll use the Web Speech API in this guide. This is a hybrid approach that combines supervised and unsupervised learning. However, hosting Whisper may not be the best option for more complex use cases, such as call centers offering customer support or global media companies transcribing large volumes of content. You’ll need to divert significant engineering resources, product staff, and overall focus away from your primary product to build the extra functionality needed. There are a multitude of use cases for it, and demand is rising. When a machine converts uttered speech into written text, it should do so with moderate to high accuracy. At the start, the system receives natural language input: any language that has evolved naturally in humans through use and repetition without conscious planning. Yearly evaluations are an outdated idea. The difference between voice recognition and speech recognition may seem arbitrary, but they are two key functions of virtual assistants. Js to look like this.
The agent and the customer remain connected during the payment process, and your organization remains safe and PCI DSS compliant. To convert voice recordings to text, you need a speech-to-text app. Employees who always endeavor to do more than they need to should be shown appreciation in a speech, which can be delivered at any time.